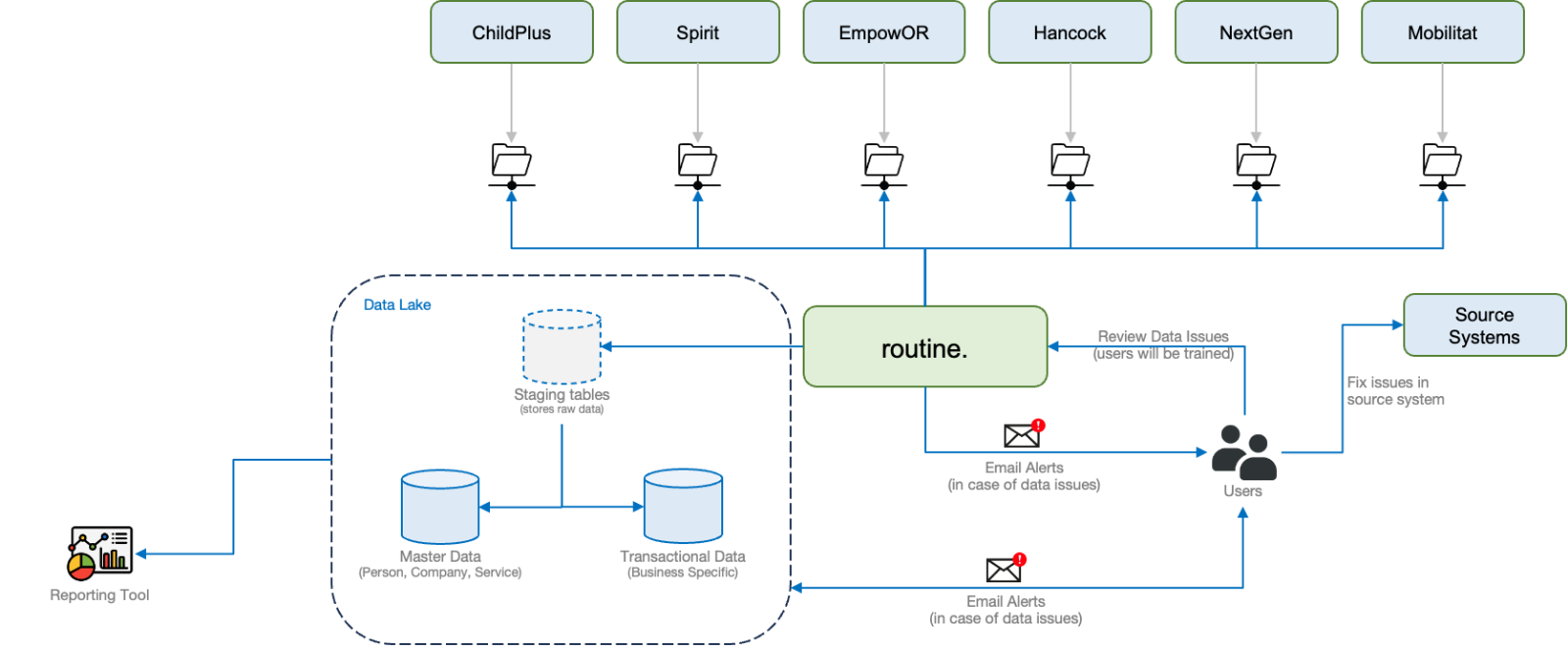

Situation

This community-based non-profit organization has six divisions: Children & Family, Home Ownership & Repair, Economic Opportunity, Transportation, Heat Energy & Fuel, and Healthcare. Each division uses a separate system to perform its own functions. All of these systems are independent SaaS solutions that do not communicate with one another. As a result, if an individual is served by more than one division, a new contact must be created in each division's system, with all details entered manually. The divisions operate independently on their own systems, with no opportunity to collaborate with one another. This leads to three main concerns:

- Data quality within each system is questionable but cannot be quantified, so data quality issues have to be identified and fixed.

- Every system works independently, so there is no data collaboration among the divisions.

- The two concerns above block accurate and detailed reporting, which hinders efficient decision-making.